I looked for a simple long wood shelf to fit under my projector screen, but there didn't seem to be anything commercially available that fit my needs, so I built my own.

- Gluing boards edge-to-edge

- Planing them flat

- Glued boards

- Cutting end pieces

- Drilling pocket holes

- Gluing and screwing the pocket holes

- Half done with pocket holes

- Filling in holes

- Half-lap jointed center brace

- Staining

- Complete

My solution was to build a streaming game server. There are a few commercial products out there (OnLive, GaiKai, NVidia GameStream, and Steam), but each has limitations that make it unsuitable for me.

- OnLive and GaiKai are cloud hosted and require modification to the game; they're also proprietary.

- NVidia GameStream works with PC games and only officially supports LAN streaming to the NVidia Shield. There are also still some compatibility issues. Project Limelight is an open source client for GameStream that I had mixed success with.

- Steam streaming only works with PC games on LAN and also has compatibility issues.

All of these solutions are based on low latency H264 streaming, which trades off some latency for better compression efficiency. They also generally require a custom client on your target platform to work.

My first few attempts followed a similar strategy. I tried to get various H264 encoders to stream with low latency by encoding only I-frames. The results weren't great.

- Latency was high, and any hiccup in the stream would be compounded because the encoders weren't able to drop frames based on the client's streaming state.

- Commonly available H264 web controls do not deal well with very small buffers. They'll typically buffer a second or more of video before beginning playback; the ones that don't would hitch as they attempted to buffer.

- MP4 containers aren't designed for streaming. Unreliable streams will break an MP4 stream with no mode for recovery. MPEG2 containers can recover, but support isn't good (this is what MPEG-DASH is based on).

I ended up settling on writing my own web server that serves a variant of MJPEG. Writing my own server allows me to know the state of the client directly so it can properly drop frames to maintain low latency.
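The frame-dropping idea can be sketched roughly as follows. This is only an illustration, not the actual server code: the `LatestFrameSlot` class and its method names are hypothetical, and it assumes one such slot per connected client, with the capture loop calling `publish` and the per-client sender calling `take` whenever the client is ready for more.

```python
import threading
from typing import Optional


class LatestFrameSlot:
    """Holds at most one pending JPEG frame for a single client (hypothetical helper)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[bytes] = None
        self.dropped = 0

    def publish(self, jpeg: bytes) -> None:
        """Called by the capture loop; overwrites any frame that was not sent yet."""
        with self._lock:
            if self._frame is not None:
                self.dropped += 1  # the client was still busy, so the old frame is stale
            self._frame = jpeg

    def take(self) -> Optional[bytes]:
        """Called when the client is ready for another frame; None if nothing is pending."""
        with self._lock:
            frame, self._frame = self._frame, None
            return frame


slot = LatestFrameSlot()
for n in range(3):                 # three frames arrive while the client is busy
    slot.publish(b"frame-%d" % n)
newest = slot.take()               # only the most recent frame is handed over
```

Because a slow client never accumulates a queue, its latency stays bounded by roughly one frame instead of growing with every hiccup.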

I took a similar approach with audio. Audio is more sensitive than video to latency and gaps, so I avoided any form of compression to prevent audio codecs from buffering. I use the Web Audio API and stream my audio raw. This also meant writing my own downsampling and filtering code for audio.
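For a sense of what downsampling with filtering involves, here is a minimal pure-Python sketch. It is not the code used in the server: the function names, the 31-tap Hamming-windowed sinc filter, and the cutoff of 0.45 divided by the decimation factor are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def lowpass_taps(num_taps: int, cutoff: float) -> List[float]:
    """Hamming-windowed sinc low-pass; cutoff is a fraction of the sample rate (0 to 0.5)."""
    mid = (num_taps - 1) / 2
    taps = []
    for i in range(num_taps):
        x = i - mid
        if x == 0:
            sinc = 2 * cutoff
        else:
            sinc = math.sin(2 * math.pi * cutoff * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * i / (num_taps - 1))
        taps.append(sinc * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalise to unity gain at DC


def downsample(samples: Sequence[float], factor: int, num_taps: int = 31) -> List[float]:
    """Filter out content above the new Nyquist limit, then keep every factor-th sample."""
    taps = lowpass_taps(num_taps, 0.45 / factor)
    out = []
    for start in range(0, len(samples) - num_taps + 1, factor):
        window = samples[start:start + num_taps]
        out.append(sum(s * t for s, t in zip(window, taps)))
    return out


steady = downsample([1.0] * 100, 2)                           # a constant signal passes through
aliasing = downsample([(-1.0) ** n for n in range(100)], 2)   # a tone at the old Nyquist is attenuated
```

Skipping the filter and simply discarding samples would fold high-frequency content back into the audible range, which is why the two steps go together.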

Solving the video and audio streaming was only part of the problem. How would I control the consoles? I ended up implementing the protocols for the DualShock 3, DualShock 2, SNES, and NES controllers using Arduinos.

- The DualShock 3 controller is mostly taken from GIMX. I modified the serial protocol to account for unreliable serial communications: GIMX assumes a wired connection, whereas I'm using Bluetooth.

- The DualShock 2 controller code was implemented using the Arduino as an SPI slave and using the documentation from here. This is currently implemented as a wired protocol and seems the most reliable.

- The NES and SNES controller code was implemented using Arduino interrupts triggered by the latch and clock lines from the NES and SNES. These controllers are a little iffy though - my suspicion is that the interrupt handling is chewing up all the CPU time. I read somewhere that entering an interrupt takes about 4 µs, while the clock period of the NES controller is 12 µs. These two are also implemented using Bluetooth, but I may switch them out to wired later.
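To make the latch-and-clock behaviour concrete, here is a small software model of a standard NES pad. It is a sketch of the protocol, not the Arduino code described above; the class and function names are hypothetical, and the value shifted in after the eighth button is an arbitrary choice for this model.

```python
from typing import Iterable, List

# Order in which a standard NES pad reports its buttons, one per clock pulse.
NES_BUTTONS = ["A", "B", "Select", "Start", "Up", "Down", "Left", "Right"]


class NesPadModel:
    """Software model of the pad's 8-bit parallel-in, serial-out shift register."""

    def __init__(self) -> None:
        self._bits: List[int] = [1] * 8  # data line is active-low: 1 means released

    def latch(self, pressed: Iterable[str]) -> None:
        """Latch pulse: sample all eight buttons at once."""
        held = set(pressed)
        self._bits = [0 if name in held else 1 for name in NES_BUTTONS]

    def data(self) -> int:
        """Level currently presented on the data line."""
        return self._bits[0]

    def clock(self) -> None:
        """Clock pulse: advance to the next button (fill value is arbitrary here)."""
        self._bits = self._bits[1:] + [1]


def read_pad(pad: NesPadModel, pressed: Iterable[str]) -> List[str]:
    """What the console does on each poll: latch once, then read and clock eight times."""
    pad.latch(pressed)
    seen = []
    for name in NES_BUTTONS:
        if pad.data() == 0:
            seen.append(name)
        pad.clock()
    return seen


buttons = read_pad(NesPadModel(), ["Start", "A"])
```

On the microcontroller, the `latch` and `clock` steps are what the two interrupts have to perform. With the figures quoted above, interrupt entry alone would use about a third of each 12 µs clock period, which fits the suspicion that timing is tight.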

Most controllers contemporary to these can be adapted for use with other systems; for example, the DualShock 2 can be adapted to the Dreamcast and the DualShock 3 can be adapted to the 360. Anything older than the PlayStation 2 generation of consoles generally has a protocol that is relatively simple to implement.

Now that I can control the consoles via PC, I needed to be able to power them on and off.

- For older consoles that have a physical power switch, I modified a cheap wireless power outlet controller. The remote on this controller likely uses a 315 MHz transmitter or some such, but instead of reverse engineering that, I just hacked the buttons themselves with a series of simple transistor inverters. I use this to control the power outlets for the NES and Dreamcast.

- For the other consoles, I use a USBUirt. There is an API provided that I use to directly send IR commands to the PS2 and XBox 360. The PS3 uses Bluetooth remotes, so I got a PS3-IR. As an added bonus, this allows me to control an OTA HD set top box.

I also wanted to be able to use my consoles without having to have the game console server up, so I wired it up like so:

Consoles -> HDMI Switch -> HDMI Splitter -> Home Theater Receiver and Web Server

The game console server can control the HDMI switch via RS232. This allows me to watch TV or play games without having the server in the loop and vice versa - the receiver doesn't need to be powered to play games over the web.

Each console needs to be converted to HDMI for this set up to work, so I had to get small converter boxes for the Dreamcast (VGA), PS2 (component), and NES (composite). These are relatively cheap, but getting one that actually works right can be a matter of luck.

As for the software architecture, controlling so many devices called for a relatively modular design. I ended up designing it like so:

- A piece of hardware (real or virtual) is a 'device'

- There are specialized devices

  - Gamepads - controllers

  - Video - Video and audio sources

  - Terminals - Video and audio sinks

  - Commands - Singular commands to be executed

- A group of devices is a 'system'

  - Systems have one video device and any number of gamepads and commands

- Systems are managed by a 'switcher'

  - This will allow switching between inputs and managing commands

  - The switcher will route the active system's input and output between all the active 'Terminals'

  - Video and audio is 'multicast' to each terminal for it to figure what to do with it

  - Terminals can send gamepad input, commands, or input switch events back to the system.
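The design above could be sketched along these lines. Every class, method, and device name here is hypothetical and only mirrors the vocabulary of the list (device, system, switcher, terminal); the real server is certainly more involved, and audio is left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Device:
    name: str


@dataclass
class Gamepad(Device):
    events: List[str] = field(default_factory=list)

    def send(self, button: str) -> None:
        self.events.append(button)


@dataclass
class Video(Device):
    def grab(self) -> bytes:
        return f"<frame from {self.name}>".encode()  # stand-in for a captured frame


@dataclass
class Command(Device):
    action: Callable[[], None]


@dataclass
class System:
    name: str
    video: Video
    gamepads: List[Gamepad] = field(default_factory=list)
    commands: Dict[str, Command] = field(default_factory=dict)


class Terminal:
    """A sink for video; a real terminal would also handle audio and user input."""

    def __init__(self) -> None:
        self.frames: List[bytes] = []

    def on_frame(self, frame: bytes) -> None:
        self.frames.append(frame)


class Switcher:
    def __init__(self, systems: List[System]) -> None:
        self.systems = {s.name: s for s in systems}
        self.active = systems[0]
        self.terminals: List[Terminal] = []

    def switch_to(self, name: str) -> None:
        self.active = self.systems[name]

    def pump(self) -> None:
        """Multicast one frame from the active system to every terminal."""
        frame = self.active.video.grab()
        for terminal in self.terminals:
            terminal.on_frame(frame)

    def press(self, pad_index: int, button: str) -> None:
        """Route gamepad input coming from a terminal to the active system."""
        self.active.gamepads[pad_index].send(button)

    def run_command(self, name: str) -> None:
        self.active.commands[name].action()


log: List[str] = []
nes = System("NES", Video("NES capture"), [Gamepad("NES pad 1")])
ps2 = System("PS2", Video("PS2 capture"), [Gamepad("DualShock 2")],
             {"power": Command("PS2 power", lambda: log.append("IR: PS2 power"))})
switcher = Switcher([nes, ps2])
web = Terminal()
switcher.terminals.append(web)
switcher.switch_to("PS2")
switcher.pump()
switcher.press(0, "Cross")
switcher.run_command("power")
```

Keeping commands as plain callables is what makes it easy to attach arbitrary side effects to a system.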

With this sort of design I could do pretty much anything within reason. For example, if I had a computer controlled LED lighting system, I could set up a command to turn the lights green when I switched to the XBox.

On the terminal side, I currently have two terminals.

- Local terminal - this displays the audio and video on the server directly and accepts gamepad and command inputs from it directly.

- Web server terminal - this terminal runs a web server, manages all incoming HTTP clients, and performs encoding on a per-client basis. This means that two people could be connected with different video and audio settings.

The terminals could allow you to do anything else though - you could write a computer vision system to play Guitar Hero or Tetris pretty trivially.

The web app is still quite primitive since I'm learning JavaScript as I go. Currently it supports on the fly video and audio setting changes, touch screen input, keyboard input, and gamepad input, provided the web browser supports the necessary APIs: WebSockets, Web Audio, and Gamepad.

Currently this means:

- Firefox, Chrome, and Safari work with all features

- IE has no sound or gamepad support.

- Opera is a reskinned Chrome these days, so it works too.

- Android 4.4 works. Anything older than 4.4 will not work.

- Mobile Safari works.

- Mobile IE works without sound.

- 3DS and Vita browsers do not work.

The mobile platforms do not work very well since their screens are way too small to manage a modern console's controller via touch screen. A tablet should work, but none of the tablet browsers support 'full screen mode', so it gets messy when touching and dragging around - the browsers tend to accidentally scroll.

Wireless is also an issue - connections are never as stable as this kind of application requires. On wired gigabit LAN, the ping varies between 7 ms and 15 ms for 720p 60 fps video with 44.1 kHz 16-bit stereo PCM. The latency split appears to be ~6 ms for encode and 1 ms to 9 ms for a full transmit and acknowledge.
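The figures above can be sanity-checked with a little arithmetic. The constants come straight from the numbers quoted; the variable names are just for illustration.

```python
SAMPLE_RATE_HZ = 44_100
BYTES_PER_SAMPLE = 2        # 16-bit samples
CHANNELS = 2                # stereo
FPS = 60

# Raw PCM bandwidth: no codec, so every sample goes over the wire.
pcm_bytes_per_second = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS
pcm_mbit_per_second = pcm_bytes_per_second * 8 / 1_000_000

# Time between frames at 60 fps, for comparison with the measured latency.
frame_interval_ms = 1000 / FPS

# Encode time plus the observed transmit-and-acknowledge range.
ENCODE_MS = 6
TRANSMIT_ACK_MS = (1, 9)
total_ms = tuple(ENCODE_MS + t for t in TRANSMIT_ACK_MS)
```

Uncompressed stereo audio works out to about 1.4 Mbit/s, and the 7 ms to 15 ms total stays under the roughly 16.7 ms between frames at 60 fps.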

This means that with wired connections on the client, games can be 'playable' quite a ways away - I was clocking 100 ms to 110 ms between the two coasts of the US, which is on the edge of being playable. I was able to play some two player NES with a friend halfway across the country this way.

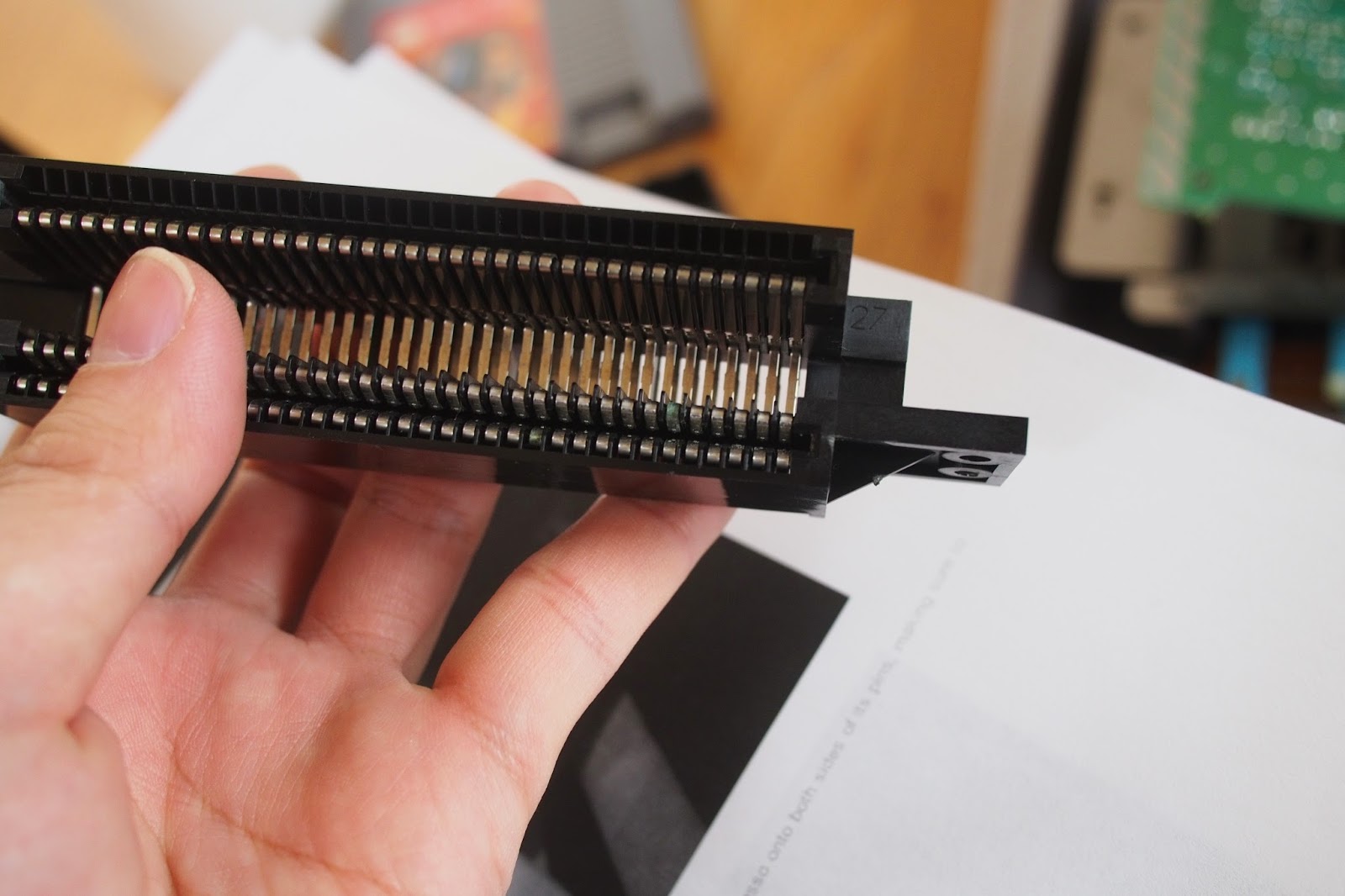

Early Attempts at pad hacking a 360 controller - this worked, but was abandoned for the DualShock 3 on Arduino - pad hacking is way too prone to error.

Fixing Up An NES - tightening the ZIF socket, cleaning connectors, removing corrosion. Don't bother with rubbing alcohol when cleaning NES cart connectors - alcohol doesn't do much to the corrosion. Use Brasso. I gave my Dreamcast the same treatment as well. It was randomly rebooting, so I took it apart and cleaned up the internal power connectors.

Here are some videos of it in action - I seem to be getting some interference on the two DualShock 3 based controllers in these videos (the 360 and the PS3) - it normally works fine, but Murphy's Law of Demos is a constant. Also, I'm really bad at Contra.

Trimmed a little in the middle where I got interrupted. I think my PS3 is defective - it's way too loud.

This video was captured using a Chrome extension, Screencastify. The capture is limited to 25 fps, but the actual stream runs at a significantly higher framerate.

Final Result